Universal Gestures for Human Robot Interaction (GESTIBOT)

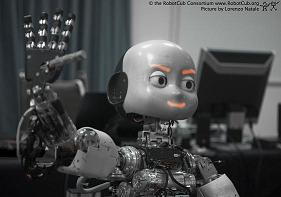

Revision as of 14:15, 27 April 2009

- Supervisor: Prof. Alexandre Bernardino

- Co-supervisor: Prof. José Santos Victor

- Accompanying researcher: Dr. Plinio Moreno

This work is part of the Humanoid Robotics research area of the Computer and Robot Vision Lab (Vislab), ISR/IST.

Keywords: Computer Vision, Human Robot Interaction, Gesture Recognition, Shared Attention.

Objectives

To develop vision-based pattern recognition methods capable of detecting universal gestures that are highly relevant for sharing attention between a robot and a human (e.g. waving, pointing, looking).

Description

Interaction between robots and humans has classically been addressed through speech or predefined gestures, usually designed in an ad hoc manner for expert users, i.e. users have to learn the association between each gesture and its meaning. However, more and more robots are intended for deployment in social and public environments, where they have to interact with non-expert users. In such cases, only universal gestures are relevant. For instance, waving is interpreted in all societies as an attention trigger or an alerting mechanism. Likewise, pointing (or looking) in a certain direction immediately draws the observer's attention to that direction. In this work we aim to give a humanoid robot the skills to detect and produce gestures that convey implicit information to and from the user.

Beyond robotics, the developed methods are also expected to have an important impact on future interactive systems (e.g. a digital camera that focuses on a waving person) and are likely to lead to commercial applications.

Prerequisites

Average grade > 14. A good knowledge of Signal and/or Image Processing, as well as Machine Learning, is recommended.

Expected Results

The developed algorithms will be implemented on the humanoid robotic platform iCub. At the end of the project, the work is expected to be illustrated through a demonstration in which:

1 - A person waves to the robot and attracts its attention (the robot looks at the person).

2 - The person looks or points at an object and the robot detects which object is the object of interest.

3 - The robot looks and points at the same object.
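As an illustration of the second demonstration step only, the following is a minimal sketch of how an object of interest might be selected once a pointing (or gaze) ray and a set of candidate object positions are available. It is not the method of this project: the function names `angle_to_ray` and `object_of_interest`, the 3D coordinate convention, and the 15-degree tolerance are all assumptions made for the example.

```python
import math

def angle_to_ray(origin, direction, point):
    """Angle (radians) between the pointing ray and the vector origin -> point."""
    to_point = [p - o for p, o in zip(point, origin)]
    dot = sum(d * t for d, t in zip(direction, to_point))
    norm = math.hypot(*direction) * math.hypot(*to_point)
    if norm == 0.0:
        raise ValueError("direction and object offset must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def object_of_interest(origin, direction, objects, max_angle=math.radians(15)):
    """Return the name of the object closest in angle to the ray, or None.

    `objects` maps a (hypothetical) object name to its 3D position.
    `max_angle` is an assumed tolerance; objects further off the ray are ignored.
    """
    best_name, best_angle = None, max_angle
    for name, position in objects.items():
        angle = angle_to_ray(origin, direction, position)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name

# Hypothetical scene: the person's hand is at the origin, pointing along +x.
scene = {"cup": (1.0, 0.1, 0.0), "ball": (0.0, 1.0, 0.0)}
target = object_of_interest((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), scene)
```

Choosing the smallest angular deviation rather than the smallest Euclidean distance keeps the selection independent of how far each object is from the person, which seems a reasonable property for pointing and gaze cues.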

Some of the tools required for this work (e.g. person and waving detection software) are already available in the research group.

Related Work

Plinio Moreno, Alexandre Bernardino, and José Santos-Victor. Waving detection using the local temporal consistency of flow-based features for real-time applications. Accepted at ICIAR 2009.