Affordance imitation


Lmontesano (talk | contribs)

Revision as of 13:33, 29 July 2009

Modules

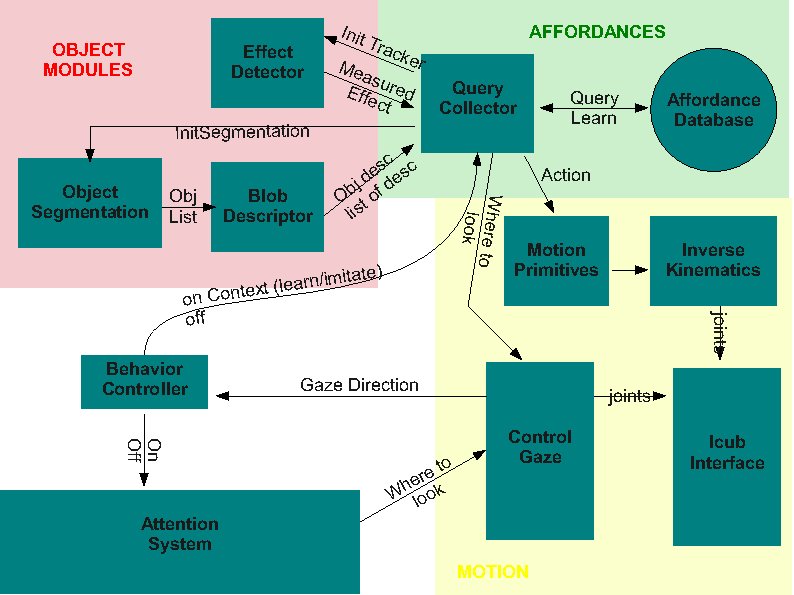

This is the general architecture currently under development.

Ports and communication

The interface between modules is still under development. The current version (subject to change as we refine it) is as follows:

- Behavior to AttentionSelection -> vocabs "on" / "off"

- Behavior to Query -> vocabs "on" / "off"

We should add some kind of context to the "on" command (imitation or learning being the most basic options).
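One possible way to attach a context to the "on" command is sketched below. This is only an illustration: the `Context` enum, the `make_command` helper, and the idea of sending the context as a second string after "on" are all assumptions, not part of the agreed interface.

```python
from enum import Enum
from typing import List, Optional


class Context(Enum):
    """Hypothetical contexts; the page names only these two as the basics."""
    IMITATION = "imitation"
    LEARNING = "learning"


def make_command(state: str, context: Optional[Context] = None) -> List[str]:
    """Build a vocab-style command as a list of strings.

    Assumption: the context, when given, follows "on" as a second element.
    "off" never carries a context.
    """
    if state not in ("on", "off"):
        raise ValueError("state must be 'on' or 'off'")
    if state == "off" or context is None:
        return [state]
    return [state, context.value]
```

With this layout, a receiver that only understands plain "on" / "off" can still read the first element and ignore the rest.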

- Query to Behavior -> "end" / "q"

- Query to Effect Detector. The main objective of this port is to start the tracker at the object of interest. We need to send at least:

  - position (x, y) within the image. 2 doubles.

  - size (h, w). 2 doubles.

  - color histogram. TBD.

  - saturation parameters (max, min). 2 ints.

  - intensity (max, min). 2 ints.

- blobDescriptor -> query

  - Affordance descriptor. Same format as camshiftplus.

  - tracker init data: histogram (could be different from the affordance one) + saturation + intensity.

- query -> object segmentation

  - vocab message: "do seg"

- object segmentation -> blob descriptor

  - labelled image

  - raw image
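The tracker initialization data that Query sends to the Effect Detector could be packed along the following lines. This is a minimal sketch rather than the actual message format: the `TrackerInit` class and its field names are hypothetical, the histogram is represented as a plain list of floats because its format is still TBD, and it is placed last in the flattened message (an assumption) so that the fixed-size fields keep fixed positions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TrackerInit:
    """Hypothetical container for the Query -> Effect Detector message."""
    x: float          # position within the image
    y: float
    h: float          # size of the region to track
    w: float
    sat_max: int      # saturation parameters (max, min)
    sat_min: int
    int_max: int      # intensity parameters (max, min)
    int_min: int
    histogram: List[float] = field(default_factory=list)  # format still TBD

    def to_list(self) -> list:
        """Flatten to 4 doubles, 4 ints, then the histogram bins."""
        return [self.x, self.y, self.h, self.w,
                self.sat_max, self.sat_min,
                self.int_max, self.int_min,
                *self.histogram]

    @classmethod
    def from_list(cls, values: list) -> "TrackerInit":
        """Rebuild a message from a flat list produced by to_list()."""
        if len(values) < 8:
            raise ValueError("expected at least 8 values")
        x, y, h, w = (float(v) for v in values[:4])
        sat_max, sat_min, int_max, int_min = (int(v) for v in values[4:8])
        histogram = [float(v) for v in values[8:]]
        return cls(x, y, h, w, sat_max, sat_min, int_max, int_min, histogram)
```

A flat list of numbers maps naturally onto a generic message container in whatever middleware carries the ports, and the same saturation and intensity fields could be reused for the tracker init data sent from blobDescriptor to query.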