Affordance imitation

Revision as of 18:56, 9 November 2009

Modules

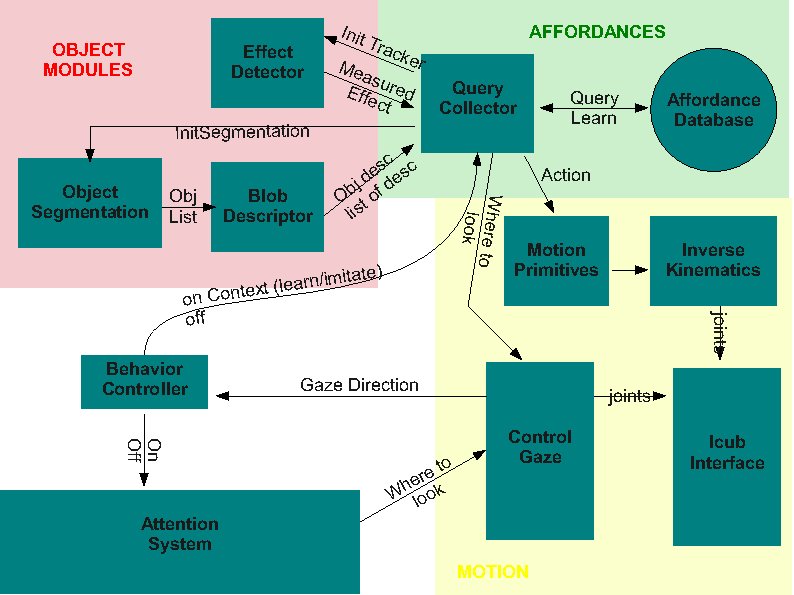

The figure above shows the general architecture, which is currently under development.

BlobSegmentation

Implemented by the edisonSegmentation module in the iCub repository. The module takes a raw RGB image as input and outputs a segmented (labeled) image whose regions indicate possible objects or object parts present in the scene.

Ports:

- /conf

- /rawimg:i

- /rawimg:o

- /labelimg:o

- /viewimg:o
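The following minimal Python sketch makes the input/output contract of BlobSegmentation concrete (raw RGB image in, labeled image out). It deliberately swaps in a much simpler technique than the real module. Colors are quantized, and each 4-connected region of equal quantized color receives its own label. edisonSegmentation instead relies on the EDISON mean-shift segmenter. The sketch assumes images are lists of rows of (r, g, b) tuples. The function name label_regions and the levels parameter are hypothetical.

```python
from collections import deque


def label_regions(image, levels=4):
    """Stand-in segmenter (NOT mean shift): label 4-connected regions of equal
    quantized color. Returns a label image with labels starting at 1."""
    step = 256 // levels
    # Quantize each channel so that similar colors compare equal.
    quant = [[tuple(c // step for c in px) for px in row] for row in image]
    height, width = len(quant), len(quant[0])
    labels = [[0] * width for _ in range(height)]
    next_label = 1
    for y in range(height):
        for x in range(width):
            if labels[y][x]:
                continue  # pixel already belongs to a region
            color = quant[y][x]
            labels[y][x] = next_label
            queue = deque([(y, x)])
            # Breadth-first flood fill over 4-neighbours with the same color.
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not labels[ny][nx] and quant[ny][nx] == color):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels


RED, BLUE = (255, 0, 0), (0, 0, 255)
print(label_regions([[RED, RED, BLUE], [RED, BLUE, BLUE]]))
# [[1, 1, 2], [1, 2, 2]]
```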

BlobDescriptor

Implemented by the blobDescriptor module in the iCub repository. The module receives a labeled image and the corresponding raw image and creates a descriptor for each identified object.

Ports:

- /conf

- /rawimag:i

- /labelimg:i

- /rawimg:o

- /viewimg:o

- /affdescriptor:o

- /trackerinit:o
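The page does not say which features blobDescriptor stores in each descriptor. This sketch assumes simple geometric and color features computed per label: area, centroid, bounding box and mean RGB. Label 0 is treated as background. The Descriptor class, its fields and describe_blobs are hypothetical names, not the module's API.

```python
from dataclasses import dataclass


@dataclass
class Descriptor:
    label: int
    area: int        # number of pixels in the blob
    centroid: tuple  # (u, v) in pixels
    bbox: tuple      # (u_min, v_min, width, height)
    mean_rgb: tuple  # average (r, g, b) over the blob


def describe_blobs(raw, labels, background=0):
    """Return {label: Descriptor} from a raw RGB image and its label image."""
    acc = {}
    for v, (raw_row, label_row) in enumerate(zip(raw, labels)):
        for u, (px, lab) in enumerate(zip(raw_row, label_row)):
            if lab == background:
                continue  # assumed convention: label 0 marks "no object"
            a = acc.setdefault(lab, {"n": 0, "su": 0, "sv": 0, "rgb": [0, 0, 0],
                                     "u0": u, "u1": u, "v0": v, "v1": v})
            a["n"] += 1
            a["su"] += u
            a["sv"] += v
            for i in range(3):
                a["rgb"][i] += px[i]
            a["u0"], a["u1"] = min(a["u0"], u), max(a["u1"], u)
            a["v0"], a["v1"] = min(a["v0"], v), max(a["v1"], v)
    return {
        lab: Descriptor(
            label=lab,
            area=a["n"],
            centroid=(a["su"] / a["n"], a["sv"] / a["n"]),
            bbox=(a["u0"], a["v0"], a["u1"] - a["u0"] + 1, a["v1"] - a["v0"] + 1),
            mean_rgb=tuple(c / a["n"] for c in a["rgb"]),
        )
        for lab, a in acc.items()
    }


RED, BLUE = (255, 0, 0), (0, 0, 255)
raw = [[RED, RED, BLUE], [RED, BLUE, BLUE]]
labels = [[1, 1, 2], [1, 2, 2]]
for d in describe_blobs(raw, labels).values():
    print(d.label, d.area, d.bbox, d.mean_rgb)
# 1 3 (0, 0, 2, 2) (255.0, 0.0, 0.0)
# 2 3 (1, 0, 2, 2) (0.0, 0.0, 255.0)
```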

EffectDetector

Implemented by the effectDetector module in the iCub repository.

Algorithm:

1. Wait for the initialization signal and parameters on /init.
2. Read the raw image that was used for the segmentation on /rawsegmimg:i.
3. Read the current image on /rawcurrimg:i.
4. Check whether the ROI specified as an initialization parameter is similar in the two images.
5. if (similarity < threshold)
6.     answer 0 on /init and go back to step 1
7. else
8.     answer 1 on /init
9.     while (no new signal has been received on /init)
10.        estimate the position of the tracked object
11.        write the estimate on /effect:o
12.        read a new image on /rawcurrimg:i
13.    end
14. end
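The steps above can be sketched as follows. The page does not specify how ROI similarity is measured or how the object is tracked, so this sketch makes several assumptions. Images are grayscale lists of rows. Similarity is an 8-bin intensity histogram compared with the Bhattacharyya coefficient, against a hypothetical threshold of 0.9. The position estimator is passed in as a function. CamShift would be a natural choice given the camshiftplus format mentioned under Ports and communication, but it is not implemented here. All function names are hypothetical.

```python
import math


def roi_pixels(image, roi):
    """Intensities inside roi = (u, v, w, h) of a list-of-rows grayscale image."""
    u, v, w, h = roi
    return [px for row in image[v:v + h] for px in row[u:u + w]]


def histogram(values, bins=8, max_value=256):
    """Normalized histogram of integer intensities in [0, max_value)."""
    hist = [0.0] * bins
    for val in values:
        hist[val * bins // max_value] += 1
    total = len(values)
    return [c / total for c in hist] if total else hist


def bhattacharyya(p, q):
    """1.0 for identical normalized histograms, 0.0 for disjoint ones."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def check_roi(seg_img, curr_img, roi, threshold=0.9):
    """Steps 4-8: the answer sent on /init (1 if the ROI looks alike, else 0)."""
    similarity = bhattacharyya(histogram(roi_pixels(seg_img, roi)),
                               histogram(roi_pixels(curr_img, roi)))
    return 0 if similarity < threshold else 1


def track(frames, new_init_pending, estimate, publish):
    """Steps 9-13: publish one estimate per frame until a new /init arrives."""
    for frame in frames:  # the first frame is the image read in step 3
        if new_init_pending():
            break
        publish(estimate(frame))  # i.e. write (u, v) on /effect:o


seg = [[10, 10, 200], [10, 10, 200]]
print(check_roi(seg, seg, (0, 0, 2, 2)))                                  # 1
print(check_roi(seg, [[250, 250, 200], [250, 250, 200]], (0, 0, 2, 2)))  # 0
```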

Ports:

- /init //receives a bottle with (u, v, w, h, h1, h2, ..., hn, vmin, vmax, smin) and answers 0 or 1

- /rawsegmimg:i //raw image that was used for the segmentation

- /rawcurrimg:i //current raw image

- /effect:o //stream of (u, v) positions of the tracked object

- /error:o //signals whether the module is working correctly (0) or has a problem (1..)
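The /init bottle is flat, so the receiver has to recover the histogram length from the total number of values. A minimal parsing sketch follows. It assumes the values arrive as a plain Python sequence (in the module itself they would come from a YARP bottle) and that at least one histogram bin is always sent. TrackerInit and parse_init are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class TrackerInit:
    u: float           # ROI position in the image
    v: float
    w: float           # ROI size
    h: float
    hist: List[float]  # color histogram h1..hn
    vmin: int
    vmax: int
    smin: int


def parse_init(values: Sequence[float]) -> TrackerInit:
    """Split a flat /init bottle (u, v, w, h, h1..hn, vmin, vmax, smin) into fields.

    The number of bins n is not sent explicitly, so it is inferred as
    len(values) - 7 (4 ROI values + 3 trailing thresholds).
    """
    if len(values) < 8:
        raise ValueError("expected u, v, w, h, at least one histogram bin, vmin, vmax, smin")
    u, v, w, h = (float(x) for x in values[:4])
    hist = [float(x) for x in values[4:-3]]
    vmin, vmax, smin = (int(x) for x in values[-3:])
    return TrackerInit(u, v, w, h, hist, vmin, vmax, smin)


init = parse_init([160, 120, 40, 30, 0.25, 0.5, 0.25, 10, 250, 30])
print(init.hist, init.vmin, init.vmax, init.smin)
# [0.25, 0.5, 0.25] 10 250 30
```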

Ports and communication

The interface between modules is under development. The current version (subject to change as we refine it) is as follows:

- Behavior to AttentionSelection -> vocabs "on" / "off"

- Behavior to Query -> vocabs "on" / "off". We should add some context to the "on" command (imitation or learning being the most basic).

- Gaze Control -> Behavior: read the current head state/position

- Query to Behavior -> "end" / "q"

- Query to Effect Detector: the main purpose of this connection is to start the tracker on the object of interest. We need to send at least:
  - position (x, y) within the image: 2 doubles
  - size (h, w): 2 doubles
  - color histogram: TBD
  - saturation parameters (max, min): 2 ints
  - intensity (max, min): 2 ints

- Effect Detector to Query
  - Camshiftplus format

- blobDescriptor -> query
  - affordance descriptor: same format as camshiftplus
  - tracker init data: histogram (may differ from the affordance one) + saturation + intensity

- query -> object segmentation
  - vocab message: "do seg"

- object segmentation -> blob descriptor
  - labeled image
  - raw image
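The Query to Effect Detector fields listed above and the /init bottle described in the EffectDetector section seem to describe the same message, but they do not line up exactly. Size is listed as (h, w), while the bottle carries w before h. Saturation is listed as (max, min), while the bottle only has smin. The sketch below flattens the fields into the /init order under these assumptions: vmin/vmax are the intensity bounds, smin is the saturation minimum, and the saturation maximum is dropped. Which description is authoritative is not stated on this page, and build_init_message is a hypothetical name.

```python
from typing import List, Sequence, Tuple


def build_init_message(position: Tuple[float, float],
                       size: Tuple[float, float],
                       histogram: Sequence[float],
                       saturation: Tuple[int, int],
                       intensity: Tuple[int, int]) -> List[float]:
    """Flatten Query -> Effect Detector fields into the assumed /init order
    (u, v, w, h, h1..hn, vmin, vmax, smin).

    Arguments follow the order listed on this page: position = (x, y),
    size = (h, w), saturation = (max, min), intensity = (max, min).
    """
    x, y = position
    h, w = size                     # listed as (h, w) but sent as w, h
    _sat_max, sat_min = saturation  # /init carries only smin, so max is dropped
    int_max, int_min = intensity
    return [float(x), float(y), float(w), float(h),
            *(float(b) for b in histogram),
            int_min, int_max, sat_min]


print(build_init_message((160, 120), (30, 40), [0.5, 0.5], (255, 30), (250, 10)))
# [160.0, 120.0, 40.0, 30.0, 0.5, 0.5, 10, 250, 30]
```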