Affordance imitation: Difference between revisions

Revision as of 18:56, 9 December 2009

Modules

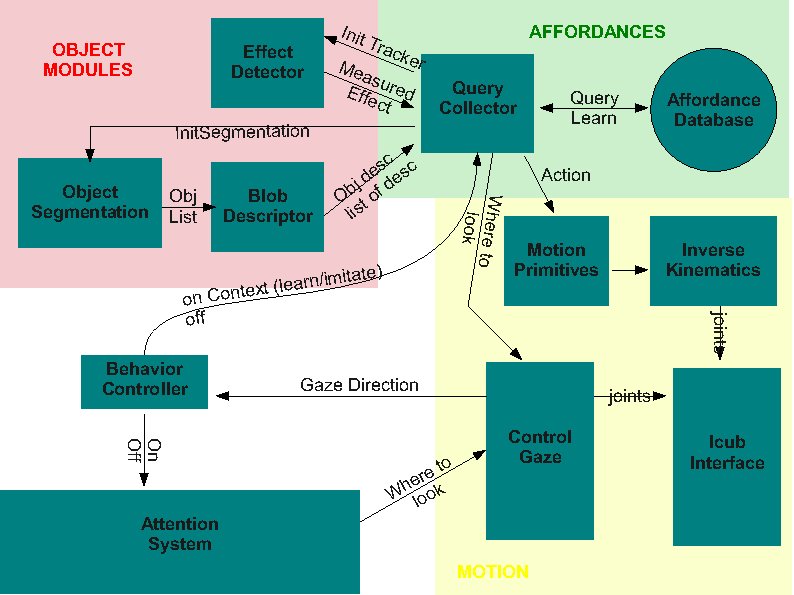

This is the general architecture currently under development.

Application Organization

The application folder is in $ICUB_ROOT/app/demoAffv2: XML scripts and .ini config files are there, in subdirectories scripts/ and conf/ respectively.

Currently existing .ini configuration files:

- edisonConfig.ini

  - configuration file for the blob segmentation module.

Currently existing XML files: -

affActionPrimitives

In November 2009, Christian, Ugo and others began developing a module containing affordance primitives, called affActionPrimitives in the iCub repository. This module relies on the ICartesianControl interface, so to compile it you need to enable the module icubmod_cartesiancontrollerclient in CMake when compiling the iCub repository. To make that switch visible, tick USE_ICUB_MOD first.

Attention: perform CMake configure/generate operations twice in a row prior to compiling the new module, due to a CMake bug; moreover, do not remove the CMake cache between the first and the second configuration.

To enable the Cartesian features on the PC104, launch iCubInterface pointing to the iCubInterfaceCartesian.ini file. Immediately afterwards, launch the solvers through the Application Manager XML file located under $ICUB_ROOT/app/cartesianSolver/scripts. The PC104 code must be compiled with the option icubmod_cartesiancontrollerserver active; iCubLisboa01 is already set up this way.

BlobSegmentation

Implemented by the edisonSegmentation module in the iCub repository. This module takes a raw RGB image as input and outputs a segmented (labeled) image, indicating possible objects or object parts present in the scene.

Ports:

- /conf

  - configuration

- /rawimg:i

  - input original image (RGB)

- /rawimg:o

  - output original image (RGB)

- /labelimg:o (INT)

  - segmented image with the labels

- /viewimg:o

  - segmented image with the color models for each region (good for visualization)

Check the full documentation at the official iCub Software Site.

Example of application:

edisonSegmentation.exe --context demoAffv2/conf

yarpdev --device opencv_grabber --movie segm_test_icub.avi --loop --framerate 0.1

yarpview /raw

yarpview /view

yarp connect /grabber /edisonSegm/rawimg:i

yarp connect /edisonSegm/rawimg:o /raw

yarp connect /edisonSegm/viewimg:o /view

The video file segm_test_icub.avi (Media:Segm_test_icub.avi) is a sequence of images taken from the iCub with colored objects in front of it, and can be downloaded from this wiki.

BlobDescriptor

Implemented by the blobDescriptor module in the iCub repository. This module receives a labeled image and the corresponding raw image, and creates a descriptor for each of the identified objects.

Ports (not counting the prefix /blobDescriptor):

- /rawImg:i

- /labeledImg:i

- /rawImg:o

- /viewImg:o - image with overlay edges

- /affDescriptor:o

- /trackerInit:o - colour histogram and parameters that will serve to initialize a tracker, e.g., CAMSHIFT
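To make the idea of "one descriptor per labeled region" concrete, here is a minimal sketch in plain Python. It is not the blobDescriptor implementation: the descriptor fields (area, centroid, bounding box) and the function name `describe_blobs` are assumptions chosen for illustration, and the labeled image is represented as a list of rows of integer labels.

```python
from typing import Dict, List


def describe_blobs(labels: List[List[int]]) -> Dict[int, dict]:
    """Compute a simple descriptor for every distinct label in a labeled image.

    Hypothetical descriptor layout (an assumption, not the module's format):
    area in pixels, centroid (u, v), and bounding box (u, v, width, height).
    """
    stats: Dict[int, dict] = {}
    for v, row in enumerate(labels):
        for u, label in enumerate(row):
            s = stats.setdefault(
                label,
                {"area": 0, "sum_u": 0, "sum_v": 0,
                 "u_min": u, "u_max": u, "v_min": v, "v_max": v},
            )
            s["area"] += 1
            s["sum_u"] += u
            s["sum_v"] += v
            s["u_min"] = min(s["u_min"], u)
            s["u_max"] = max(s["u_max"], u)
            s["v_min"] = min(s["v_min"], v)
            s["v_max"] = max(s["v_max"], v)

    return {
        label: {
            "area": s["area"],
            "centroid": (s["sum_u"] / s["area"], s["sum_v"] / s["area"]),
            "bbox": (s["u_min"], s["v_min"],
                     s["u_max"] - s["u_min"] + 1,
                     s["v_max"] - s["v_min"] + 1),
        }
        for label, s in stats.items()
    }


# Tiny 4x3 labeled image with three regions (labels 0, 1 and 2).
example = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 0],
]
blobs = describe_blobs(example)
```

In this sketch every distinct label is treated as a region, including label 0; whether the real segmentation reserves a label for the background is not stated here.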

EffectDetector

Implemented by effectDetector module in the iCub repository.

Algorithm:

1. wait for initialization signal and parameters on /init

2. read the raw image that was used for the segmentation on /rawsegmimg:i

3. read the current image on /rawcurrimg:i

4. check if the ROI specified as an initialization parameter is similar in the two images

5. if (similarity < threshold)

6.     answer 0 on /init and go back to 1.

7. else

8.     answer 1 on /init

9.     while (not received another signal on /init)

10.        estimate the position of the tracked object

11.        write the estimate on /effect:o

12.        read a new image on /rawcurrimg:i

13.    end

14. end
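The control flow of steps 4 to 14 can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the page does not say how similarity is measured, so histogram intersection is used here as a stand-in, and `run_effect_detector`, `estimate_position` and `init_pending` are hypothetical names rather than parts of the effectDetector module. Port I/O is replaced by ordinary arguments and return values.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

Position = Tuple[int, int]


def histogram_intersection(a: Sequence[float], b: Sequence[float]) -> float:
    """Return a similarity in [0, 1] between two histograms (assumed measure)."""
    smaller_mass = min(sum(a), sum(b))
    if smaller_mass == 0:
        return 0.0
    return sum(min(x, y) for x, y in zip(a, b)) / smaller_mass


def run_effect_detector(
    segm_roi_hist: Sequence[float],
    curr_roi_hist: Sequence[float],
    threshold: float,
    frames: Iterable[object],
    estimate_position: Callable[[object], Position],
    init_pending: Callable[[], bool],
) -> Tuple[int, List[Position]]:
    """Run one pass of steps 4-14.

    Returns (reply, effects): reply is the 0/1 answer for /init, and effects
    is the list of (u, v) estimates that would be written on /effect:o.
    """
    # Steps 4-6: the ROI must look alike in the segmentation and current images.
    if histogram_intersection(segm_roi_hist, curr_roi_hist) < threshold:
        return 0, []

    # Steps 8-13: track until another signal arrives on /init.
    effects: List[Position] = []
    for frame in frames:
        if init_pending():
            break
        effects.append(estimate_position(frame))
    return 1, effects


# Frames are stand-ins here: each "frame" is already the tracked position.
ok_reply, ok_effects = run_effect_detector(
    [4, 2, 0, 0], [4, 2, 0, 0], 0.5, [(1, 2), (3, 4)], lambda f: f, lambda: False
)
bad_reply, bad_effects = run_effect_detector(
    [4, 0], [0, 4], 0.5, [(1, 2)], lambda f: f, lambda: False
)
```

A real tracker such as CAMSHIFT would replace `estimate_position`, and the caller would loop back to waiting on /init after each return.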

Ports:

- /init //receives a bottle with (u, v, width, height, h1, h2, ..., h16, vmin, vmax, smin), answers 1 for success or 0 for failure.

- /rawSegmImg:i //raw image that was used for the segmentation

- /rawCurrImg:i

- /effect:o //flow of (u,v) positions of the tracked object
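The field order listed for /init (u, v, width, height, h1 to h16, vmin, vmax, smin) adds up to 23 values. The sketch below builds and checks such a message as a space-separated string in plain Python. The helper names `build_init_message` and `parse_init_message` are hypothetical, and treating every field as an integer is an assumption based on the sample input shown in the example on this page.

```python
from typing import Dict, List, Sequence

HIST_BINS = 16  # h1..h16 in the /init description
FIELD_COUNT = 4 + HIST_BINS + 3  # ROI + histogram + (vmin, vmax, smin)


def build_init_message(u: int, v: int, width: int, height: int,
                       hist: Sequence[int],
                       vmin: int, vmax: int, smin: int) -> str:
    """Serialize the init parameters in the order given for /init."""
    if len(hist) != HIST_BINS:
        raise ValueError(f"expected {HIST_BINS} histogram bins, got {len(hist)}")
    values = [u, v, width, height, *hist, vmin, vmax, smin]
    return " ".join(str(int(x)) for x in values)


def parse_init_message(text: str) -> Dict[str, object]:
    """Split a space-separated init message back into named fields."""
    values: List[int] = [int(token) for token in text.split()]
    if len(values) != FIELD_COUNT:
        raise ValueError(f"expected {FIELD_COUNT} values, got {len(values)}")
    u, v, width, height = values[:4]
    hist = values[4:4 + HIST_BINS]
    vmin, vmax, smin = values[4 + HIST_BINS:]
    return {"u": u, "v": v, "width": width, "height": height,
            "hist": hist, "vmin": vmin, "vmax": vmax, "smin": smin}


# The sample input from the example on this page.
sample = "350 340 80 80 255 128 0 0 0 0 0 0 0 0 0 0 0 0 0 255 0 256 150"
fields = parse_init_message(sample)
rebuilt = build_init_message(fields["u"], fields["v"], fields["width"],
                             fields["height"], fields["hist"],
                             fields["vmin"], fields["vmax"], fields["smin"])
```

Checking the field count before sending catches the most likely mistake when typing the message by hand, namely a histogram with too few or too many bins.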

Example of application:

yarpserver

effectDetector

yarpdev --device opencv_grabber --name /images

yarp connect /images /effectDetector/rawcurrimg

yarp connect /images /effectDetector/rawsegmimg

yarp write ... /effectDetector/init #type this as input: 350 340 80 80 255 128 0 0 0 0 0 0 0 0 0 0 0 0 0 255 0 256 150

yarp read ... /effectDetector/effect #show something red to the camera, to see something happening.

QueryCollector

Implemented by the demoAffv2 module in the iCub repository. This module receives inputs from the object descriptor module and the effect descriptor. When activated by the behavior controller, it uses the information about the objects to select actions that will be executed by the robot. When there is no interaction activity, it notifies the behavior controller, which may decide to switch to another behavior.

Ports:

- /demoAffv2/effect

- /demoAffv2/synccamshift

- /demoAffv2/objsdesc

- /demoAffv2/

- /demoAffv2/motioncmd

- /demoAffv2/gazecmd

- /demoAffv2/behavior:i

- /demoAffv2/behavior:o

- /demoAffv2/out

- /demoAffv2/out

Ports and communication

The interface between modules is under development. The current version (subject to changes as we refine it) is as follows:

- Behavior to AttentionSelection -> vocabs "on" / "off"

- Behavior to Query -> vocabs "on" / "off". We should add some kind of context to the on command (imitation or learning being the very basic).

- Gaze Control -> Behavior: read the current head state/position

- Query to Behavior -> "end" / "q"

- Query to Effect Detector. The main objective of this port is to start the tracker at the object of interest. We need to send at least:

  - position (x,y) within the image. 2 doubles.

  - size (h,w). 2 doubles.

  - color histogram. TBD.

  - saturation parameters (max min). 2 int.

  - intensity (max min). 2 int.

- Effect Detector to Query

  - Camshiftplus format

- blobDescriptor -> query

  - Affordance descriptor. Same format as camshiftplus

  - tracker init data. histogram (could be different from affordance) + saturation + intensity

- query -> object segmentation

  - vocab message: "do seg"

- object segmentation -> blob descriptor

  - labelled image

  - raw image
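As a quick cross-check of the list above, the links can be written down as data and queried. This is only a sketch: the module labels (for example `ObjectSegmentation`, `GazeControl`) are normalized names chosen for illustration, not port or module names from the repository, and the payload strings simply restate the list.

```python
from typing import List, Tuple

# (sender, receiver, payload) for each link in the list above.
LINKS: List[Tuple[str, str, str]] = [
    ("Behavior", "AttentionSelection", 'vocabs "on" / "off"'),
    ("Behavior", "Query", 'vocabs "on" / "off"'),
    ("GazeControl", "Behavior", "current head state/position"),
    ("Query", "Behavior", '"end" / "q"'),
    ("Query", "EffectDetector", "tracker initialization parameters"),
    ("EffectDetector", "Query", "Camshiftplus format"),
    ("BlobDescriptor", "Query", "affordance descriptor"),
    ("BlobDescriptor", "Query", "tracker init data"),
    ("Query", "ObjectSegmentation", 'vocab message "do seg"'),
    ("ObjectSegmentation", "BlobDescriptor", "labelled image"),
    ("ObjectSegmentation", "BlobDescriptor", "raw image"),
]


def inputs_of(module: str) -> List[str]:
    """Return the sorted, de-duplicated senders that feed the given module."""
    return sorted({sender for sender, receiver, _ in LINKS if receiver == module})


def payloads(sender: str, receiver: str) -> List[str]:
    """Return every payload listed for one directed link, in list order."""
    return [p for s, r, p in LINKS if s == sender and r == receiver]


query_inputs = inputs_of("Query")
segmentation_outputs = payloads("ObjectSegmentation", "BlobDescriptor")
```

Keeping the interface in one table like this makes it easy to spot a module with no listed inputs or a link whose payload is still undecided while the interface is being refined.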