ICub instructions

Revision as of 14:30, 3 March 2015

This article explains how to manage Chico (iCubLisboa01) for demos and experiments alike. We will describe the hardware setup that accompanies our iCub, how to turn things on and off, and how to run demos. For a generic description of this robot, refer to the Chico article.

An older version of this article can be found at iCub demos/Archive and Innovation Days 2009.

Setup

The inventory consists of:

| machine | notes | IP address, username |

|---|---|---|

| Chico the robot (duh) | has a pc104 CPU in its head | 10.10.1.50, icub |

| iCub laptop | used to control the robot; it also shares NFS volumes with other machines | 10.10.1.53, icub |

Below Chico's support, from top to bottom we have:

| what | notes |

|---|---|

| Xantrex XFR 35-35 | thin power supply unit, to power pc104 and some motors. Voltage: 12.9, current: 05.0 or more |

| Xantrex XFR 60-46 | thick power supply unit, to power most motors. Voltage: 40.0 (initially it is 0 - it changes when you turn the green motor switch), current: 10.0 or more |

| APC UPS | uninterruptible power supply |

Note: the iCubBrain chassis, which contains two servers used for computation (icubbrain1 - 10.10.1.41, icubbrain2 - 10.10.1.42) is normally in the ISR server room on the 6th floor. When we bring the iCub to external demos, it sits on top of the power supply units.

Switching on the robot

Hardware side

- Check that the UPS is on

- Turn on the iCub laptop

- Turn on the Xantrex power supply units; make sure the voltage values are correct (see the Setup section)

- Check that the two iCubBrain servers are on (if the demo is held at ISR then they are already on: ignore this step)

- Check that the red emergency button is unlocked

- Turn on the green switches behind Chico

- Safety hint: first turn on the pc104 CPU switch, wait for the CPU to be on, and only then switch the motors on

- Second safety hint, after turning on motors: wait for the four purple lights on each board to turn off and become two blue lights – at this point you can continue to the next steps
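The voltage check in the hardware steps can be written down as a small Python sketch. The expected values are the ones listed in the Setup table; the tolerance, the dictionary layout and the function name are assumptions made for illustration, and the readings still have to be taken by eye from the front panels.

```python
# Expected front-panel readings, taken from the Setup table of this article.
EXPECTED = {
    "XFR 35-35": {"voltage": 12.9, "min_current": 5.0},
    "XFR 60-46": {"voltage": 40.0, "min_current": 10.0},
}
VOLTAGE_TOLERANCE = 0.2  # hypothetical tolerance in volts, not from the article


def check_psu(name, voltage, current_limit):
    """Return a list of problems for one power supply unit (empty if fine)."""
    spec = EXPECTED[name]
    problems = []
    if abs(voltage - spec["voltage"]) > VOLTAGE_TOLERANCE:
        problems.append(f"{name}: voltage {voltage} differs from {spec['voltage']}")
    if current_limit < spec["min_current"]:
        problems.append(f"{name}: current {current_limit} is below {spec['min_current']}")
    return problems
```

Keep in mind that the XFR 60-46 shows 0 until the green motor switch is turned on, so a check of that unit only makes sense after that step.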

Software side

- Run cluster_manager.sh from the laptop desktop icon and start the needed YARP components (detailed instructions are in section #Starting YARP components). The summary is:

  - First, always launch yarpserver with the 'Run' button in the Nameserver panel

  - Then, if your application employs machines other than the laptop itself, launch the necessary yarprun listeners (usually on icub-laptop, pc104, icubbrain1, icubbrain2)

- Launch the yarpmanager.sh icon, which will open the yarpmanager GUI with all the necessary iCub applications

- In the iCubStartup_part_1 panel, launch iCubInterface. Warning: make sure that the iCub is vertical before launching this software, otherwise the robot will fall down (dangerous)

- Other panels: depending on which demo you want to execute, you will need to start other necessary drivers such as cameras or facial expressions

Shutting off the robot

Software side

- Stop your demo software and the cameras with the GUI; do not stop iCubInterface (in the iCubStartup panel) nor yarpserver (in the Cluster Manager window) yet

- In the iCubStartup panel(s) of the GUI, stop all modules, including iCubInterface. Chico will thus move its limbs and head to a "parking" position. (If things don't quit gracefully, stop or kill the process several times and be ready to hold Chico's chest, since the head may fall forward.)

- In the Cluster Manager window, stop the instances of yarprun, and finally yarpserver
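The software shutdown is essentially the start-up sequence run backwards. The following Python sketch makes that dependency explicit; the component labels (in particular "demo", which here stands for the demo software together with the cameras) and the function names are assumptions chosen for illustration, not part of any YARP tool.

```python
# Start-up order as described in this article; "demo" is a hypothetical label
# covering the demo software and the cameras.
STARTUP_ORDER = ["yarpserver", "yarprun", "iCubInterface", "demo"]


def shutdown_order(startup=STARTUP_ORDER):
    """Components are stopped in the reverse of the order they were started."""
    return list(reversed(startup))


def can_stop(component, still_running):
    """A component may be stopped only when nothing started after it is running."""
    later = STARTUP_ORDER[STARTUP_ORDER.index(component) + 1:]
    return not any(c in still_running for c in later)
```

For example, `can_stop("yarpserver", {"yarprun"})` is false, which mirrors the rule that yarpserver is always stopped last.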

Hardware side

- Turn off the two green switches. Pay attention when turning off the 'Motors' switch: if iCubInterface was not stopped properly in the previous steps, then be ready to hold the robot when turning that switch

- Turn off the Xantrex power supply units

- Turn off the iCub laptop

- If necessary, turn off other machines (such as portable servers during a demo outside ISR) and the UPS

Stopping the robot with the red emergency button

The emergency button, as the name suggests, is to be used for emergencies only. For example:

- When the robot is about to break something

- When some components make nasty noises that suggest they are going to break

Use this button with great care, as it cuts power to all motors and controllers abruptly! In particular, be ready to hold Chico from his chest, because the upper part of his body might fall upon losing power.

To start using the robot again, it is best to quit and restart all the software components and interfaces; refer to #Switching on the robot for that. Don't forget to unlock the red emergency button after an emergency, otherwise the iCubInterface program will start but will not move any joint.

Starting YARP components

See Cluster Management in VisLab for background information about this GUI (not important for most users).

- Click on the cluster_manager.sh icon on the desktop and select 'Run in Terminal' (or 'Run' if you want to suppress the optional debug information terminal)

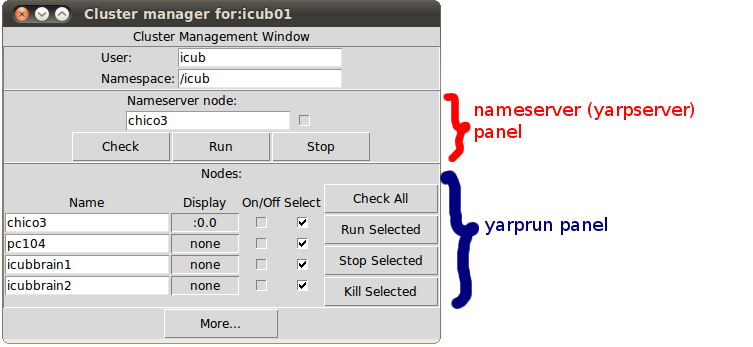

- You will see the following window, divided into two main components: yarpserver and yarpruns (the former is a global, single-instance nameserver; the latter are various instances of network command listeners, one for each machine involved in the demos)

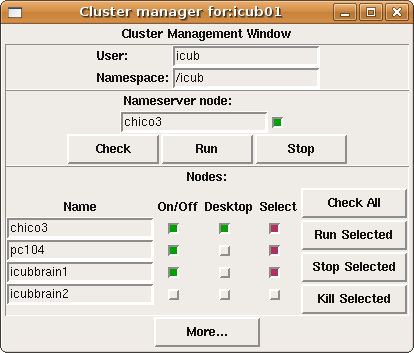

- In the yarpserver panel, click on the 'Run' button. The light above the 'Stop' button will become green.

- In the yarprun panel, choose the following four machines in the 'Select' column (icub-laptop, pc104, icubbrain1, icubbrain2), then click 'Run Selected' and wait a bit so that all machines can turn on their green 'On' light.

Do all the selected machines have their 'On/Off' switch green by now? If so, proceed to the next step. If not, click on 'Check All' and see if we have a green light from the pc104 at this point. You should see something similar to this, actually with all the required machines showing a green 'On' light:

Other components

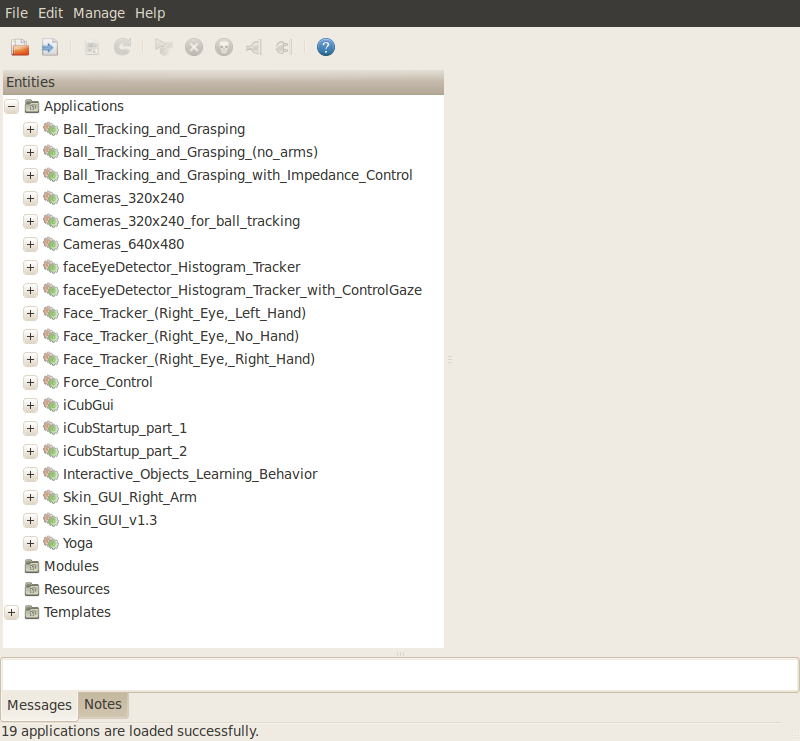

Many demos and programs assume that components such as cameras, the iCubInterface driver or the facial expression driver have been launched. To start them, first of all run the yarpmanager.sh icon. A GUI similar to this one will appear:

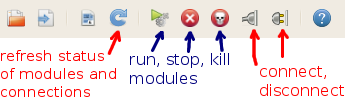

When you have one or more applications running, each one will have its panel (tab) and the following toolbar will be visible. Here are the most important functions (which affect all modules of the currently selected application):

iCubInterface

This program controls the motors and reads the robot sensors (encoders, inertial sensor, skin, force/torque). It is needed by almost all demos.

- check that the red emergency button is unlocked

- open the iCubStartup_part_1 panel in the yarpmanager GUI; click the Run Application button (this will start the kinematics modules: iCubInterface, cartesian solvers and gaze control)

- open the iCubStartup_part_2 panel in the yarpmanager GUI; click the Run Application button (this will start the dynamics modules: wholeBodyDynamics and gravityCompensator)

Wait for all boards to answer (which takes around 1 minute); after that, you are ready to move on.
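Instead of waiting a fixed minute, one could poll until a port opened by the robot interface shows up. The sketch below is only an illustration: `wait_until` is a generic helper written for this example, and `port_exists` assumes that the `yarp exists` companion command is available on the PATH and exits with status 0 when the port is alive. Which port to watch is also an assumption; pick one that your setup actually opens.

```python
import subprocess
import time


def port_exists(port):
    """True if `yarp exists <port>` exits with status 0 (requires YARP on PATH)."""
    return subprocess.run(["yarp", "exists", port]).returncode == 0


def wait_until(check, timeout=90.0, interval=2.0,
               sleep=time.sleep, clock=time.monotonic):
    """Call check() repeatedly until it returns True or the timeout expires."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

A call such as `wait_until(lambda: port_exists("/icub/head/state:o"))` would then block until the (assumed) head state port appears, or give up after 90 seconds.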

There is a GUI application to manually command robot joints. Just invoke it from the iCub laptop with:

robotMotorGui

Cameras

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- open the Cameras_320x240_for_ball_tracking panel in the yarpmanager GUI; click the Run Application button; click the Connect Links button

Facial expression driver

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- open the Face_Expressions panel in the yarpmanager GUI; click the Run Application button; click the Connect Links button

Note that the actual expression device driver (the first module of the two listed) runs on the pc104. Sometimes that process cannot be properly killed and restarted from the graphical interface; if that happens, you can either run kill -9 on its PID or do a hard restart of the pc104.
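For reference, the same forced kill can be issued from Python. This is a minimal sketch that assumes a POSIX system and that you already know the PID; it has to run on the machine that hosts the process (here the pc104), and `force_kill` is a name made up for this example.

```python
import os
import signal


def force_kill(pid):
    """Send SIGKILL to pid, the equivalent of `kill -9 <pid>` (POSIX only)."""
    try:
        os.kill(pid, signal.SIGKILL)
    except ProcessLookupError:
        return False  # no such process: it had already exited
    return True
```

SIGKILL cannot be caught by the target process, so the driver gets no chance to clean up; that is why this is a last resort before a hard restart.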

iCubGui

This (optional) component shows a real-time 3D model of the robot on the screen.

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- open the iCubGui panel in the yarpmanager GUI; click the Run Application button; click the Connect Links button

Skin GUI

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- TBC

Specific demos

Refer to iCub demos/Archive for older information such as starting demos from terminals.

Ball tracking and grasping

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- Make sure that the applications iCubStartup_part_1, iCubStartup_part_2, Cameras_320x240_for_Ball_Tracking have been started

- Optionally, Face_Expression and iCubGui can be started too

- open the Ball_Tracking_and_Grasping_with_Impedance_Control panel in the yarpmanager GUI; click the Run Application button; click the Connect Links button

Note that this demo launches the left eye camera with special parameter values:

- brightness 0

- sharpness 0.5

- white balance red 0.474 (you may need to lower this, depending on illumination)

- white balance blue 0.648

- hue 0.482

- saturation 0.826

- gamma 0.400

- shutter 0.592

- gain 0.305
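If you need to experiment with the red white balance under different lighting, it can help to keep the values in one place. The sketch below stores the parameters quoted in this section in a dictionary and lowers the red component in small steps; the key names, the step size and the function are hypothetical, and the code does not talk to the camera driver.

```python
# Left-eye camera parameters quoted in this section; the key names are made up.
LEFT_EYE_PARAMS = {
    "brightness": 0.0,
    "sharpness": 0.5,
    "white_balance_red": 0.474,
    "white_balance_blue": 0.648,
    "hue": 0.482,
    "saturation": 0.826,
    "gamma": 0.400,
    "shutter": 0.592,
    "gain": 0.305,
}


def lower_red(params, step=0.05):
    """Return a copy with white_balance_red lowered by step, never below 0."""
    adjusted = dict(params)
    adjusted["white_balance_red"] = max(
        0.0, round(params["white_balance_red"] - step, 3)
    )
    return adjusted
```

The original dictionary is left untouched, so the documented defaults remain available for comparison.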

Face tracking

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- Make sure that iCubStartup_part_1 (iCubInterface) and cameras are running

- Optionally, start the facial expression driver

- Select the faceTracking_RightEye_NoHand panel (or the Left/Right Arm version); start modules and make connections

Facial expressions

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- Start the facial expression driver

- Select the EMOTIONS2 icon (Run in terminal); stop with ctrl+c

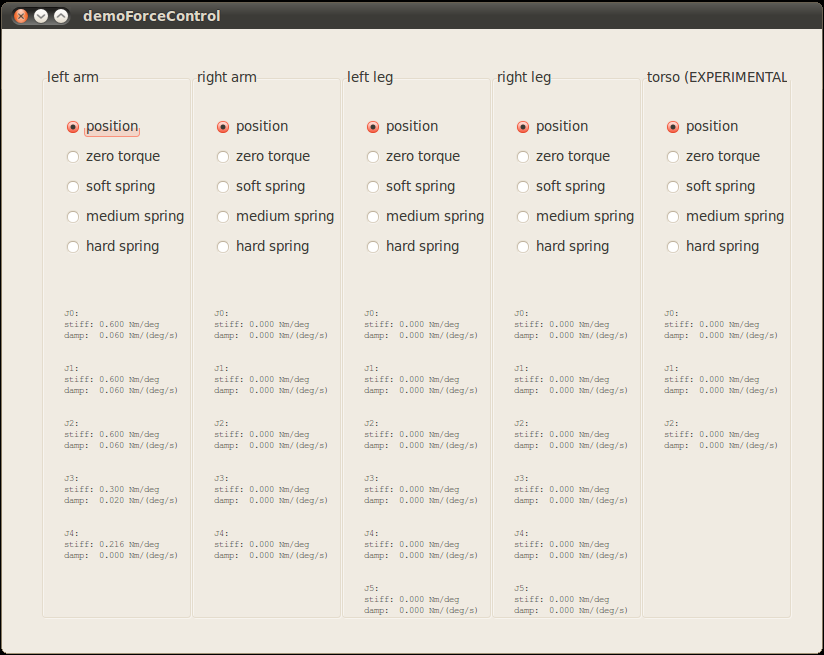

Force Control

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components

- Make sure that the applications iCubStartup_part_1 and iCubStartup_part_2 have been started

- Click on the Force_Control panel in the yarpmanager GUI, Run Application, Connect Links

- select the desired modality (screenshot below) and manually move the robot limbs:

- More information available here: http://eris.liralab.it/wiki/Force_Control

Interactive Objects Learning Behavior

For this demo, you also need a Windows machine to run the speech recognition module. The 17" Tsunami laptop is already configured for this purpose.

In Linux:

- yarp clean to remove dead ports

- make sure that no IOL module is running in the background: the output of yarp name list should be empty; if it is not, stop any IOL module that is still running
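The two Linux checks can be scripted. The following Python sketch runs `yarp clean`, reads the output of `yarp name list` and reports ports that look like leftovers from IOL modules. It assumes that each entry is printed as a line starting with `registration name <port>`, and the `/iol` prefix is a guess at how the IOL ports are named; adjust both to what you see on your system.

```python
import subprocess

# Hypothetical port-name prefixes; adjust them to the IOL modules you run.
IOL_PREFIXES = ("/iol",)


def registered_ports(name_list_output):
    """Extract port names from the text printed by `yarp name list`.

    Assumes entries of the form:
    registration name /port ip 10.10.1.53 port 10002 type tcp
    """
    ports = []
    for line in name_list_output.splitlines():
        words = line.split()
        if words[:2] == ["registration", "name"] and len(words) > 2:
            ports.append(words[2])
    return ports


def leftover_iol_ports(name_list_output, prefixes=IOL_PREFIXES):
    """Return the registered ports whose names start with one of the prefixes."""
    return [p for p in registered_ports(name_list_output) if p.startswith(prefixes)]


def query_name_server():
    """Run `yarp clean`, then return the output of `yarp name list`."""
    subprocess.run(["yarp", "clean"], check=True)
    result = subprocess.run(["yarp", "name", "list"],
                            capture_output=True, text=True, check=True)
    return result.stdout
```

With YARP installed, `leftover_iol_ports(query_name_server())` should return an empty list before you start the demo.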

Turn on the Windows machine. On startup, it will launch a command prompt with a yarprun listener.

Back to the Linux machine, in the yarpmanager "Interactive Objects Learning Behavior with SCSPM" panel: refresh, run, connect.

The grammar of recognized spoken sentences is located at https://github.com/robotology/iol/blob/master/app/lua/verbalInteraction.txt

Yoga

- Make sure that basic YARP components are running: summarized Cluster Manager instructions are here; detailed instructions are in section #Starting YARP components. For this demo we only need yarpserver (yarpruns are not necessary)

- Make sure that iCubStartup_part_1 (iCubInterface) is running

- open the Yoga panel in the yarpmanager GUI; click the Run Application button