Universal Gestures for Human Robot Interaction (GESTIBOT)

- Supervisor: Prof. Alexandre Bernardino

- Co-Supervisor: Prof. José Santos Victor

- Accompanying researcher: Dr. Plinio Moreno

Work carried out within the Humanoid Robotics Research Area of the ISR Computer and Robot Vision Lab (Vislab).

Keywords: Computer Vision, Human Robot Interaction.

Objectives

Exploit knowledge from studies of human attention to derive efficient pattern search mechanisms for object detection tasks. Apply the methods to a humanoid robot so that it can interact with objects of interest in the scene with improved reaction times.

Description

Current object detection technology searches images exhaustively for patterns of interest. Although such brute-force approaches may achieve good detection rates, they are often too computationally demanding because they require scanning the full image, regardless of the task at hand. Humans, instead, allocate their attentional resources ("computational power") to the most promising parts of the visual field, according to their current expectations. They are therefore able to react faster, on average, to environmental events. In this work we will exploit knowledge of human visual attention to design artificial vision algorithms that are more efficient than existing ones.
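The difference between exhaustive scanning and attention-guided search can be illustrated with a toy example. This is a minimal sketch under stated assumptions and is not the method this work will develop.

The following pieces are hypothetical placeholders:

- the saliency score, which is the absolute difference between a window's mean intensity and the global image mean (a crude stand-in for a bottom-up saliency map);
- the `bright_detector` function;
- the `budget` parameter.

The cheap saliency pass still visits every window. The saving comes only from running the detector, which is assumed to be expensive, on a fixed budget of the most salient windows.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]
Detector = Callable[[Image], bool]


def window_corners(h: int, w: int, size: int, stride: int) -> List[Tuple[int, int]]:
    """Top-left corners of all size x size windows on a regular stride grid."""
    return [(r, c) for r in range(0, h - size + 1, stride)
            for c in range(0, w - size + 1, stride)]


def crop(img: Image, r: int, c: int, size: int) -> Image:
    """Extract the size x size window whose top-left corner is (r, c)."""
    return [row[c:c + size] for row in img[r:r + size]]


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def contrast_saliency(img: Image, r: int, c: int, size: int, global_mean: float) -> float:
    """Hypothetical cheap saliency: how much the window's mean stands out from the image mean."""
    return abs(mean([v for row in crop(img, r, c, size) for v in row]) - global_mean)


def exhaustive_search(img: Image, size: int, stride: int,
                      detector: Detector) -> Tuple[List[Tuple[int, int]], int]:
    """Brute force: run the detector on every window. Returns (hits, detector calls)."""
    hits, calls = [], 0
    for r, c in window_corners(len(img), len(img[0]), size, stride):
        calls += 1
        if detector(crop(img, r, c, size)):
            hits.append((r, c))
    return hits, calls


def attentive_search(img: Image, size: int, stride: int, detector: Detector,
                     budget: int) -> Tuple[List[Tuple[int, int]], int]:
    """Rank windows by the cheap saliency score, then run the detector only on the top `budget`."""
    g = mean([v for row in img for v in row])
    ranked = sorted(window_corners(len(img), len(img[0]), size, stride),
                    key=lambda rc: contrast_saliency(img, rc[0], rc[1], size, g),
                    reverse=True)  # most salient first; ties keep grid order
    hits, calls = [], 0
    for r, c in ranked[:budget]:
        calls += 1
        if detector(crop(img, r, c, size)):
            hits.append((r, c))
    return hits, calls


def bright_detector(win: Image) -> bool:
    """Hypothetical stand-in for an expensive object detector."""
    return mean([v for row in win for v in row]) > 0.5


# Toy scene: a dark 16x16 image containing one bright 4x4 "object" at (8, 8).
img = [[0.0] * 16 for _ in range(16)]
for r in range(8, 12):
    for c in range(8, 12):
        img[r][c] = 1.0

print(exhaustive_search(img, 4, 4, bright_detector))           # ([(8, 8)], 16)
print(attentive_search(img, 4, 4, bright_detector, budget=2))  # ([(8, 8)], 2)
```

In this toy scene both searches find the same object. The attention-guided search calls the detector 2 times instead of 16. Real scenes would need a far better saliency model, and a fixed budget can miss objects that are not salient under the chosen score.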

Prerequisites

Average grade > 14. Good knowledge of Signal and/or Image Processing, as well as Machine Learning, is recommended.

Expected Results

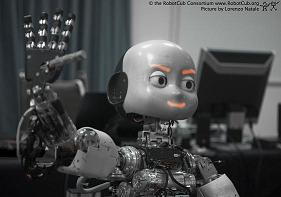

The algorithms will be implemented on the iCub robot platform. They are expected to allow faster reaction times and higher responsiveness to external events than currently existing methodologies. The developed algorithms will also have an impact on the implementation of object detection algorithms (e.g., faces) on low-power processors such as those found in digital cameras and mobile phones, possibly leading to commercial applications.

Related Work

Multimodal Saliency-Based Bottom-Up Attention: A Framework for the Humanoid Robot iCub. Jonas Ruesch, Manuel Lopes, Alexandre Bernardino, Jonas Hornstein, José Santos-Victor, Rolf Pfeifer. 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, May 19-23, 2008. Link: http://www.isr.ist.utl.pt/labs/vislab/publications/08-icra-attention.pdf