Affordance imitation

Modules

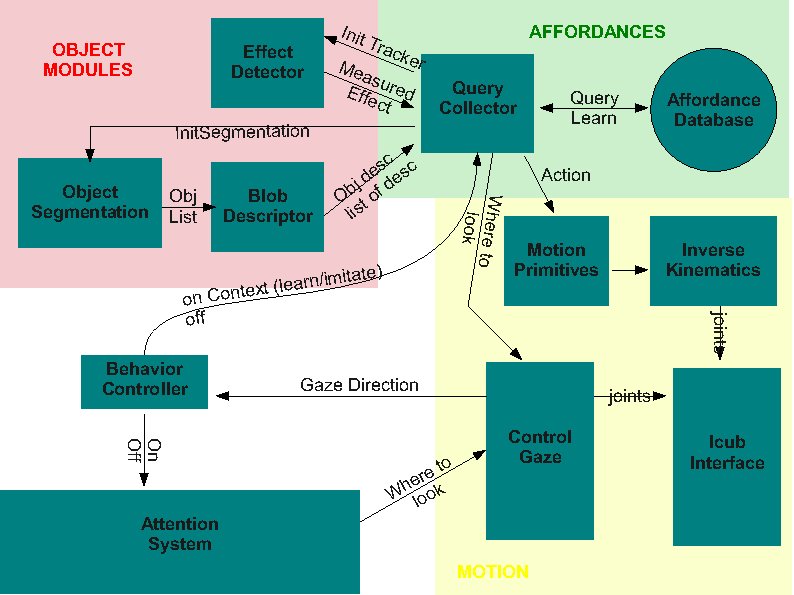

The figure above shows the general architecture currently under development.

BlobSegmentation

Implemented by the edisonSegmentation module in the iCub repository. This module takes a raw RGB image as input and outputs a segmented (labeled) image indicating possible objects or object parts present in the scene.

PORTS:

- /conf

- /rawimg:i

- /rawimg:o

- /labelimg:o

- /viewimg:o
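To make the input/output contract concrete (raw RGB image in, labeled image out), here is a toy stand-in. It is not what edisonSegmentation does: EDISON is based on mean-shift segmentation, whereas this sketch simply groups 4-connected pixels of identical color. The image representation (a list of rows of `(r, g, b)` tuples) and the function name `label_regions` are assumptions made for illustration.

```python
from collections import deque


def label_regions(image):
    """Toy segmenter (hypothetical): 4-connected components of equal-colour pixels.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    Returns a label image of the same size; every pixel gets a label >= 1.
    """
    height, width = len(image), len(image[0])
    labels = [[0] * width for _ in range(height)]  # 0 means "not yet labeled"
    next_label = 1
    for y in range(height):
        for x in range(width):
            if labels[y][x]:
                continue  # pixel already belongs to a region
            colour = image[y][x]
            labels[y][x] = next_label
            queue = deque([(y, x)])
            while queue:  # breadth-first flood fill over same-colour neighbours
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not labels[ny][nx] and image[ny][nx] == colour):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels
```

For a 2x3 image with a red region on the left and a blue region on the right, `label_regions` returns `[[1, 1, 2], [1, 2, 2]]`: each connected region gets its own integer label, which is the kind of labeled image BlobDescriptor consumes.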

BlobDescriptor

Implemented by the blobDescriptor module in the iCub repository. This module receives a labeled image and the corresponding raw image and creates a descriptor for each identified object.

PORTS:

- /conf

- /rawimg:i

- /labelimg:i

- /rawimg:o

- /viewimg:o

- /affdescriptor:o

- /trackerinit:o
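The sketch below illustrates what "a descriptor for each identified object" could look like, computed from a labeled image plus the raw image. The descriptor fields (area, centroid as (x, y), size as (h, w), mean RGB color) and the name `describe_blobs` are hypothetical; they are chosen to line up with the position and size fields the tracker initialization needs, not taken from the actual blobDescriptor output.

```python
def describe_blobs(labels, image):
    """Hypothetical per-label descriptors from a label image and the raw RGB image.

    Returns {label: {"area", "centroid" (x, y), "size" (h, w), "mean_rgb"}}.
    """
    stats = {}
    for y, row in enumerate(labels):
        for x, label in enumerate(row):
            s = stats.setdefault(label, {"area": 0, "sum_x": 0, "sum_y": 0,
                                         "min_x": x, "max_x": x,
                                         "min_y": y, "max_y": y,
                                         "rgb": [0, 0, 0]})
            s["area"] += 1
            s["sum_x"] += x
            s["sum_y"] += y
            s["min_x"], s["max_x"] = min(s["min_x"], x), max(s["max_x"], x)
            s["min_y"], s["max_y"] = min(s["min_y"], y), max(s["max_y"], y)
            for c in range(3):  # accumulate the raw colour of this pixel
                s["rgb"][c] += image[y][x][c]

    descriptors = {}
    for label, s in stats.items():
        n = s["area"]
        descriptors[label] = {
            "area": n,
            "centroid": (s["sum_x"] / n, s["sum_y"] / n),  # (x, y)
            "size": (s["max_y"] - s["min_y"] + 1,          # (h, w) of bounding box
                     s["max_x"] - s["min_x"] + 1),
            "mean_rgb": tuple(v / n for v in s["rgb"]),
        }
    return descriptors
```

One pass over the pixels accumulates sums and extrema per label, and a second pass over the (few) labels turns them into averages, so the cost is linear in the image size.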

Ports and communication

The interface between modules is still under development. The current version (subject to change as we refine it) is as follows:

- Behavior to AttentionSelection -> vocabs "on" / "off"

- Behavior to Query -> vocabs "on" / "off"

We should add some context to the "on" command, imitation and learning being the most basic ones.
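One possible way to carry that context is an optional second word after "on". The sketch below assumes plain text commands such as `on imitation`, `on learning` and `off`; the command syntax, the set of valid contexts and the names `BehaviorCommand` and `parse_command` are all hypothetical, and the real modules may encode this differently (for example as YARP vocabs).

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical set of contexts, taken from the two named in the proposal.
VALID_CONTEXTS = {"imitation", "learning"}


@dataclass
class BehaviorCommand:
    active: bool                   # True for "on", False for "off"
    context: Optional[str] = None  # e.g. "imitation"; None if not given


def parse_command(text: str) -> BehaviorCommand:
    """Parse a hypothetical 'on [context]' / 'off' command string."""
    words = text.split()
    if not words:
        raise ValueError("empty command")
    verb, args = words[0], words[1:]
    if verb == "off":
        if args:
            raise ValueError("'off' takes no arguments")
        return BehaviorCommand(active=False)
    if verb == "on":
        if len(args) > 1:
            raise ValueError("'on' takes at most one context")
        context = args[0] if args else None
        if context is not None and context not in VALID_CONTEXTS:
            raise ValueError(f"unknown context: {context}")
        return BehaviorCommand(active=True, context=context)
    raise ValueError(f"unknown command: {verb}")
```

Keeping the context optional means a bare "on" stays valid, so modules that only understand the current "on" / "off" vocabulary would not break.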

- Gaze Control -> Behavior: read the current head state/position

- Query to Behavior -> "end" / "q"

- Query to Effect Detector: the main purpose of this port is to initialize the tracker on the object of interest. We need to send at least:

  - position (x, y) within the image: 2 doubles.

  - size (h, w): 2 doubles.

  - color histogram: format TBD.

  - saturation parameters (max, min): 2 ints.

  - intensity (max, min): 2 ints.

- Effect Detector to Query

  - Camshiftplus format

- blobDescriptor -> query

  - affordance descriptor: same format as Camshiftplus

  - tracker init data: histogram (may differ from the one in the affordance descriptor) + saturation + intensity

- query -> object segmentation

  - vocab message: "do seg"

- object segmentation -> blob descriptor

  - labeled image

  - raw image
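The tracker initialization message from Query to Effect Detector is the most fully specified item in the list above (position, size, color histogram, saturation and intensity bounds). The sketch below packs it into a flat list of values, similar in spirit to what a YARP Bottle would carry, and unpacks it again. The field order, the length prefix before the histogram bins, and the names `TrackerInit`, `to_list` and `from_list` are assumptions; the histogram format is still TBD in the design, so it is modeled here as a plain list of floats.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TrackerInit:
    x: float        # position within the image (2 doubles)
    y: float
    h: float        # size (2 doubles)
    w: float
    sat_max: int    # saturation parameters (max, min): 2 ints
    sat_min: int
    int_max: int    # intensity (max, min): 2 ints
    int_min: int
    histogram: List[float] = field(default_factory=list)  # format TBD

    def to_list(self) -> list:
        """Hypothetical flat layout: 8 fixed fields, histogram length, then bins."""
        return [self.x, self.y, self.h, self.w,
                self.sat_max, self.sat_min, self.int_max, self.int_min,
                len(self.histogram), *self.histogram]

    @classmethod
    def from_list(cls, values: list) -> "TrackerInit":
        """Inverse of to_list; rejects messages whose histogram is truncated."""
        if len(values) < 9:
            raise ValueError("message too short")
        x, y, h, w = (float(v) for v in values[:4])
        sat_max, sat_min, int_max, int_min = (int(v) for v in values[4:8])
        n_bins = int(values[8])
        histogram = [float(v) for v in values[9:9 + n_bins]]
        if len(histogram) != n_bins:
            raise ValueError("truncated histogram")
        return cls(x, y, h, w, sat_max, sat_min, int_max, int_min, histogram)
```

Putting the histogram length before the bins lets the receiver parse the message even while the histogram size is still undecided, and it leaves room to append further fields later.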